So who caught the Jeopardy! IBM "Man vs. Machine" Challenge that aired on February 14, 15, and 16? It should be, like, required viewing for all nerds, trivia geeks, etc. I mean, I like Jeopardy and trivia on a normal basis, but the IBM Challenge took Jeopardy to the next level, which apparently includes science as well as a little bit of what most people might consider sci-fi.

A little background for those who don't know what this is about: for the past few years, IBM has been working on a computer that can answer questions by mimicking the way human beings answer them. "Watson," as the computer is named, is loaded with a ton of data and has algorithms that let him pick out the key words in a Jeopardy clue, sift through his data for possible answers, and estimate how likely each of those candidate answers is to be correct. The scientists hope that one day this sort of artificial intelligence could help, say, doctors working in extremely isolated areas without access to medical textbooks and other resources: they could plug symptoms into the system and have the computer suggest a diagnosis and treatment. You can watch the entire 3-day challenge on YouTube if you'd like.
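To make "pick out the key words, sift through the data, and rank the candidates" a bit more concrete, here is a toy sketch in Python. It is emphatically not how IBM built Watson (the real system, which IBM calls DeepQA, combined far more kinds of analysis and evidence scoring); it only illustrates the general shape under made-up assumptions: a hypothetical stopword list, a few hand-written passages standing in for Watson's stored data, and a simple keyword-overlap score standing in for his confidence estimate.

```python
import re

# Hypothetical stopword list: filler words to ignore when extracting key words.
STOPWORDS = {"a", "an", "and", "as", "because", "has", "in", "is", "its",
             "of", "on", "the", "this", "to"}


def keywords(text):
    """Lowercase the text, split it into words, and drop the stopwords."""
    words = re.findall(r"[a-z']+", text.lower())
    return [w for w in words if w not in STOPWORDS]


def rank_candidates(clue, passages):
    """Rank candidate answers by how many of the clue's key words their passage shares.

    `passages` maps each candidate answer to a short passage about it (a toy
    stand-in for Watson's stored data). Returns (candidate, confidence) pairs,
    best first, where confidence is the fraction of clue key words matched.
    """
    clue_terms = set(keywords(clue))
    ranked = []
    for candidate, passage in passages.items():
        overlap = clue_terms & set(keywords(passage))
        confidence = len(overlap) / len(clue_terms) if clue_terms else 0.0
        ranked.append((candidate, confidence))
    # Highest confidence first; ties keep their original order.
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


clue = "Known as the Red Planet, this world has iron oxide dust on its surface"
passages = {
    "Mars": "Mars is called the Red Planet because of the iron oxide on its surface.",
    "Venus": "Venus is the hottest planet in the solar system.",
    "Jupiter": "Jupiter is the largest planet in the solar system.",
}

for candidate, confidence in rank_candidates(clue, passages):
    print(f"{candidate}: {confidence:.3f}")
# Mars: 0.625
# Venus: 0.125
# Jupiter: 0.125
```

Keyword overlap is about the crudest confidence score imaginable, which is part of the point: even a sketch this simple "answers" by ranking candidates rather than by understanding the clue.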

Varying opinions on artificial intelligence aside, I found the whole thing so very fascinating. It's not that Watson knew more than Ken Jennings or Brad Rutter; I'm fairly certain that all three were capable of answering the majority of the questions. I'd be curious to see how they programmed Watson to, shall we say, "buzz in." Because when it comes down to it in Jeopardy, what matters is not so much how quickly you figure out the answer to the clue as how fast your reflexes are. So where did the IBM scientists draw the line on Watson's reflexes? There's no doubt that a computer's so-called "reflexes" would be much faster than human hand-eye coordination. I suspect that Watson answered so many questions correctly not because he knew more than Ken or Brad, but because, with his computer reflexes, he was able to buzz in more quickly than either man. This frustrates me quite a bit, because it makes it impossible to tell whether Watson won on knowledge or on buzzer speed, which totally messes with anyone trying to draw scientific conclusions from the match, augghhhhh!

But that is an issue for IBM to consider for the future, and not what I want to focus on here.

How, you might ask, is what Watson does any different from one of us typing a question into Google and getting back possible answers? That's a very good, legitimate question. The difference is in how the data is stored. The Internet as we know it right now is not raw data: it's data that other human beings have already organized into "un-break-down-able" webpages. When you type a question such as "How many U.S. presidents had/have a daughter as their oldest child?" into Google, you'll only get an answer back if someone else has already sorted through the data and written up the answer. Otherwise, you'll notice, you get hits for related pages, such as "children of U.S. presidents," "genealogies of U.S. presidents," and so on. It's possible, of course, to work out how many U.S. presidents have eldest daughters from those related pages, but that takes as long as it takes any human being to work through that kind of data-organizing problem.

Lots of really smart people have, in TED talks, proposed something like an "Internet 2.0," in which all of the data in the world is simply put out there for anyone to access and play with as they want. In that case, typing the question "How many U.S. presidents had/have a daughter as their oldest child?" would prompt the system to pick out the keywords in the question (U.S. presidents, daughter, oldest child) and then narrow the data shown to just what fits those keywords. This is essentially how Watson works. Internet 2.0 would mean that all of this data would be available in its raw, unadulterated form, and if we wanted to find an answer to something via an Internet search, we wouldn't have to rely on someone else having already answered the question.
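Here is a rough sketch of why raw, structured data changes things. If presidents and their children were stored as records rather than written up as webpages, the presidents-and-daughters question becomes a simple filter-and-count that a program can answer directly, even if no one has ever asked it before. The `President` and `Child` record types, their fields, and the sample data below are hypothetical placeholders, not real genealogy.

```python
from dataclasses import dataclass, field


@dataclass
class Child:
    name: str
    sex: str          # "F" or "M" in this toy schema
    birth_year: int


@dataclass
class President:
    name: str
    children: list = field(default_factory=list)  # list of Child records


def eldest_child(president):
    """Return the president's oldest child, or None if there are no children.

    If two children share the earliest birth year, the first one listed wins.
    """
    if not president.children:
        return None
    return min(president.children, key=lambda child: child.birth_year)


def count_eldest_daughters(presidents):
    """Count the presidents whose oldest child is a daughter."""
    count = 0
    for president in presidents:
        eldest = eldest_child(president)
        if eldest is not None and eldest.sex == "F":
            count += 1
    return count


# Made-up records for illustration only; these are not real presidents.
presidents = [
    President("President A", [Child("Ann", "F", 1801), Child("Ben", "M", 1804)]),
    President("President B", [Child("Carl", "M", 1850), Child("Dora", "F", 1852)]),
    President("President C"),  # no children
    President("President D", [Child("Eve", "F", 1903)]),
]

print(count_eldest_daughters(presidents))  # 2
```

Nothing in this code was written with this particular question in mind; the same records could answer "how many had an eldest son?" just as easily.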

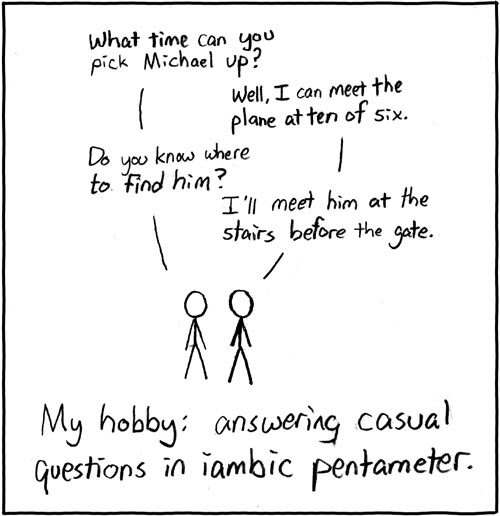

The thing is, most people don't realize until it's explained to them that the way we process language and information is NOT like Internet 2.0, but much more like a Google search. We humans loooove patterns: we organize information into them, make patterns out of nothing if need be, and remember things better when given mnemonics. We remember simple childhood nursery rhymes far better than passages of prose, because rhyme and meter organize the data of language into patterns that are much easier for us to memorize.

(Image credit: xkcd.com)

Artificial intelligence as it currently stands does not do this. Watson and systems like it know the most common way of reciting the alphabet, but if asked to recite the alphabet in random order, they could probably do it with not much more effort than it takes to recite it the "traditional" way. This has both pros and cons. On the plus side, Watson can much more easily sift through raw data and come up with brand-new ways to organize it. On the downside, Watson can't take the shortcuts that make human language processing so blindingly fast: we get to answers far more effortlessly than we would if we had to go through what Watson goes through every time we wanted an answer. And if you watch the Jeopardy episodes, occasionally Watson does some really stupid things that no human would do, and I'm sure the IBM scientists are now falling over themselves trying to figure out why oh why Watson did that. (Seriously, watch it. It only takes an hour. It's incredible.)

When a human answers questions on Jeopardy, we don't do it the way Watson does, sifting through all the data we possess and ranking possible answers by their likelihood of being correct. I don't even know how a human does it, because I haven't taken enough linguistics or cognitive science classes. All I know is that, thus far, no computer has been able to replicate the way humans process natural language.

(Image credit: onlineeducation.net)

There are some things that computers are not yet able to replicate, and one of them is the interactive experience between the reader, the text, and the world in which both of them exist.

I challenge you to think about that the next time you open a book.
